Cosmology (a short layman overview)

Cosmological model

According to the current cosmological model, the so-called Hot Big Bang model, the Universe as we know it today evolved from an extremely hot and dense initial state in which matter existed in the form of a quark-gluon plasma. No atoms or atomic nuclei were present then, and protons, neutrons and electrons emerged only later, once the Universe had cooled sufficiently. They coexisted with other species of elementary particles, in particular photons and neutrinos, in a state of thermodynamic equilibrium. The primordial matter was distributed nearly homogeneously throughout the Universe. In the course of its evolution the Universe expanded and thus cooled down, gradually turning into the state we observe today. First, when the temperature dropped to roughly T = 10^9 K, atomic nuclei appeared as a result of the process usually referred to as nucleosynthesis. At that time, however, only the nuclei of the lightest elements were created. The heavier elements had to wait for the appearance of sufficiently evolved stars. Once nucleosynthesis was over, the neutrinos became effectively independent of all the other components of the Universe, interacting with them only through gravity, which is very weak. The photons, however, remained tightly coupled to the still ionized matter through electromagnetic interactions, such as Compton and Thomson scattering. This lasted only until the temperature of the Universe dropped to roughly T = 4,000 K. At that time, in the process referred to as recombination, the nuclei and free electrons combined to form electrically neutral atoms. As a result the Universe became essentially transparent to the primordial photons, which at that moment began their virtually unhindered travel across the Universe. Some of those photons can be observed today.
These are the photons which at the time of recombination happened to be at just such a distance from us that it has taken them the time between recombination and now to cover it. The observed primordial photons therefore come to us from a fictitious two-dimensional spherical surface with a radius equal to that distance, usually referred to as the Last Scattering Surface. Their properties are nearly independent of direction - a consequence of the homogeneity of the early Universe - and correspond to those of thermodynamic equilibrium - a consequence of the thermodynamic equilibrium of the early phases of the Universe's evolution. Their effective temperature has been lowered by the expansion and today equals T0 = 2.735 K. These photons are referred to as the Cosmic Microwave Background radiation.
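
The cooling described above can be made a little more quantitative. The sketch below assumes the standard relation T = T0 (1 + z) between the radiation temperature and the redshift z, which is not stated in the text itself, and uses the temperatures quoted above. The function name `redshift_at_temperature` is purely illustrative.

```python
# Present-day CMB temperature as quoted in the text (kelvin).
T0_K = 2.735


def redshift_at_temperature(temperature_k, t0_k=T0_K):
    """Redshift z at which the radiation temperature was temperature_k.

    Assumes the standard scaling T = T0 * (1 + z); this relation is an
    assumption of the sketch and is not spelled out in the overview.
    """
    return temperature_k / t0_k - 1.0


# Epoch temperatures as quoted in the text (kelvin).
epochs = {"nucleosynthesis": 1e9, "recombination": 4000.0}

for name, temperature in epochs.items():
    z = redshift_at_temperature(temperature)
    print(f"{name}: T = {temperature:.3g} K -> z ~ {z:.3g}")
```

Under these assumptions the quoted recombination temperature of 4,000 K corresponds to a redshift of roughly 1,460, meaning that the Universe has stretched by about that factor since the CMB photons were released.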

Cosmic Microwave Background (ditto)

Theoretical perspective

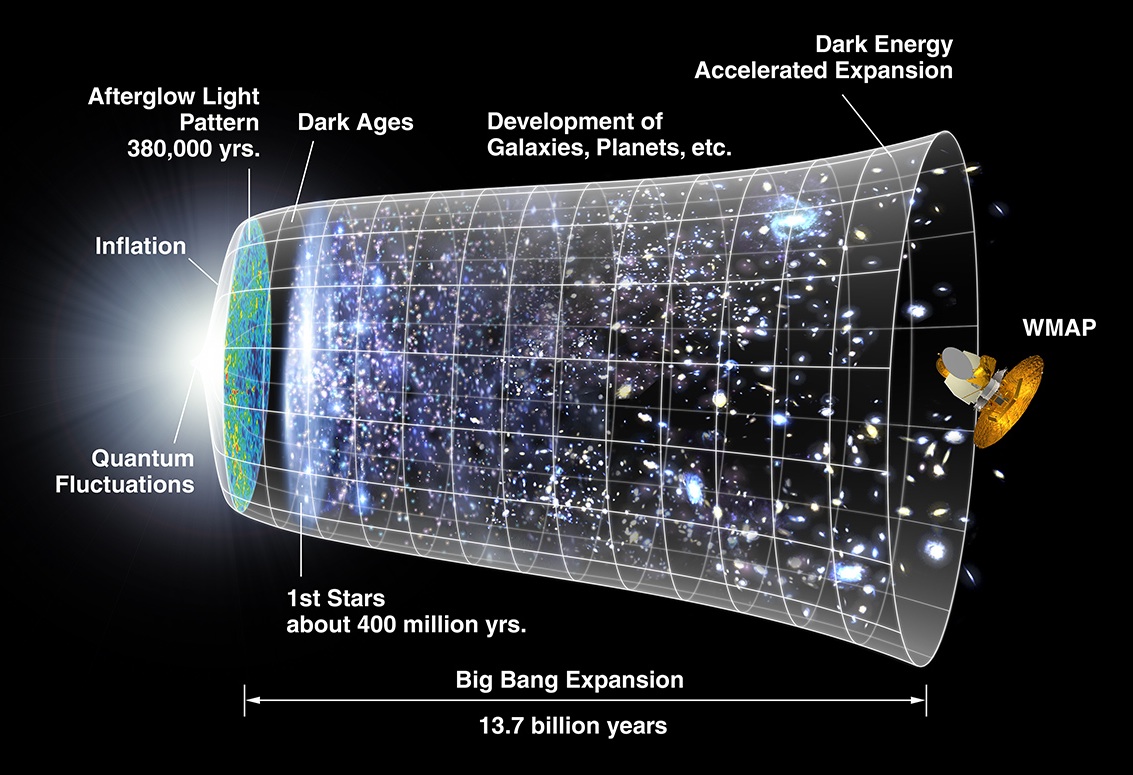

A simplified history of the Universe is depicted in the figure (courtesy of the WMAP team), where the main events are shown as a function of time, with more recent events further to the right. The small thumbnail of a satellite depicts the WMAP satellite operating "here and now" and observing the CMB photons, which have travelled from afar and from far back in time, as marked by the greenish patch. The latter visualizes the Universe at the moment of recombination, as it can be, and is, observed with the aid of the CMB photons. As marked in the figure, the Universe was perfectly homogeneous neither in the late nor in the early stages of its evolution. In fact, its current state is strikingly inhomogeneous, with matter clumped into galaxies, which themselves are typically grouped into so-called clusters of galaxies. To arrive at such a state, the very early Universe could not have been completely homogeneous. Small perturbations over a whole range of sizes needed to exist then, and they could have been generated by inflation - a little understood and rather mysterious process thought to have taken place as early as 10^-35 seconds after the beginning of the Universe. It is marked at the very left of the figure, where the Universe is seen to increase in size most rapidly. This rapid growth is yet another consequence of inflation. As a result, the Cosmic Microwave Background photons were also not perfectly homogeneous at the time of recombination, and so, as seen today, they display a small level of variation from overall isotropy. This so-called CMB anisotropy is hence directly related to the inhomogeneities of the very early Universe. In fact, it encodes many of the properties of inflation, which can be deciphered from the statistics of the observed patterns of the CMB anisotropy. These small deviations are the main focus of present-day CMB research.
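
For readers who want to see what "the statistics of the observed patterns" usually means in practice, the anisotropy is commonly expanded in spherical harmonics and summarized by its angular power spectrum. The notation below (the coefficients a_lm, the spectrum C_l and its estimator) follows the common convention and is not taken from this overview. It assumes a statistically isotropic sky, and conventions differ on which of the lowest multipoles are included.

```latex
\frac{\Delta T}{T_0}(\hat{n})
  = \sum_{\ell \ge 1} \sum_{m=-\ell}^{\ell} a_{\ell m}\, Y_{\ell m}(\hat{n}),
\qquad
\langle a_{\ell m}\, a^{*}_{\ell' m'} \rangle
  = C_\ell\, \delta_{\ell \ell'}\, \delta_{m m'},
\qquad
\hat{C}_\ell = \frac{1}{2\ell + 1} \sum_{m=-\ell}^{\ell} \left| a_{\ell m} \right|^2 .
```

Here the first expression decomposes the temperature fluctuation in a direction on the sky, the second defines the power spectrum as the variance of the coefficients, and the third is the simplest full-sky estimate of that variance from a single observed map.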

The extreme conditions of the Universe at the time of inflation are well beyond the capabilities of man-made laboratories, including the famed Large Hadron Collider (LHC) at CERN in Geneva, even once it reaches its projected best performance. Studies of the CMB anisotropies are therefore a unique, albeit indirect, way to study the laws of physics in extreme circumstances. This demonstrates the importance of CMB research in a context going beyond cosmology alone.

After recombination the CMB photons travelled nearly freely through the Universe, at least until the temperature dropped to roughly T = 20 K. By that time the early objects in the Universe were sufficiently abundant and emitted enough light to ionize the matter in the Universe once again (reionization). Some of the CMB photons then interacted with the ionized plasma once more. Moreover, the photons continued to be affected by the weak gravitational attraction of the emerging matter inhomogeneities (galaxies, their clusters, etc.). This may have slightly changed the photons' energy, via the so-called integrated Sachs-Wolfe or Rees-Sciama effects, or their direction, as a result of gravitational lensing. On occasion they may also have interacted with the hot, ionized medium contained in the clusters (the Sunyaev-Zel'dovich effects). All of these are very small effects, but they did leave an imprint on the CMB photons, which are therefore a rich source of knowledge about the state of the post-recombination Universe.

Experimental perspective

The Cosmic Microwave Background radiation was discovered, rather serendipitously, by Penzias and Wilson in 1965. The first definitive detection of a deviation from isotropy, in the form of a dipole, had to wait nearly 15 years. The dipole is recognized as originating from our motion with respect to the CMB rest frame. The first detection of the primordial anisotropies came only in 1992, as a result of the observational campaign by the dedicated CMB satellite called COBE. This detection was then confirmed and elaborated on by an entire slew of balloon-borne and ground-based observations, which from 1998 onwards started to detect the anisotropy in the intensity of the CMB photons nearly routinely. This effort was crowned by the second NASA CMB satellite, called WMAP, which was launched in 2001 and has been successfully collecting data ever since. In parallel, more subtle properties of the CMB anisotropies have also been observed. These are related to the polarization of the CMB photons - a much cleaner probe of the conditions in the very early Universe. To date, polarization measurements are still scarce and not very precise. This is due to the minute amplitude of the sought-after signal, which is two to three orders of magnitude smaller than the CMB intensity anisotropies. The exploitation of the polarization signature still awaits the next generation of CMB experiments, which will have to observe the Universe with an unprecedented number of detectors and do so for an extended period of time. The recently launched European Planck satellite is expected to further our knowledge of the polarization properties of the CMB; however, it will still be left to post-Planck observations to provide definitive clues about inflation.

With the technological developments of the past decade, better, more sensitive, stable and efficient CMB observatories are being built and deployed worldwide. Some of them will soon resume their operations. They will utilize up to two orders of magnitude more detectors than yesteryear's designs and will operate for up to a few years, measuring the sky signal a few hundred times per second. This will unavoidably result in data sets unprecedented in size as well as in complexity, posing a huge challenge for the effort of extracting information about the early Universe from those observations.

Computational challenges in CMB data analysis

Modern CMB data analysis has proven to be a challenging and sophisticated task, requiring advanced processing techniques, numerical algorithms and methods, and their implementations. There are several reasons for this.

- The CMB data sets are very heterogeneous. This is because, at least for the so-called scanning experiments (these are the main focus of the MIDAS project), the data obtained directly from the instrument have the form of a one-dimensional, time-ordered data set. These so-called time-ordered data, however, contain information about the two-dimensional map of the sky, and estimating this map is one of the goals of CMB data analysis. From the point of view of theoretical models, most of the information is contained in the so-called power spectrum of the CMB signal, which is a harmonic-domain object. The data analysis tools thus have to operate in all three of these domains as well as in between them. CMB data analysis therefore requires:

  - one-dimensional tools: FFTs, wavelet transforms, polynomial transforms, filtering tools, generalized least squares solvers, etc.;

  - two-dimensional tools: spherical harmonic transforms, two-dimensional wavelet transforms, etc.;

  - linear algebra tools: iterative solvers, preconditioners, matrix decompositions (Cholesky, SVD, ...) and inversions (direct, Sherman-Morrison-Woodbury, "by-partition", ...) for dense, dense and highly structured (e.g., Toeplitz matrices) and sparse cases, etc.;

  - non-linear tools: minimization/maximization routines (Newton-Raphson), adaptive fitting, etc.;

  - and many others, such as random number generators, sampling algorithms, image processing tools, compressed sensing, etc.

- The time domain measurements are very noisy, with the sky signals buried in instrumental and photon noise. Moreover, the instrumental noise is typically correlated on long time scales. This rules out a simple divide-and-conquer approach, as big segments of the data have to be processed simultaneously. Given the rather limited memory per processor anticipated for the forthcoming supercomputers, that on its own calls for massive parallelization of the CMB data analysis codes. In addition, the noise correlation pattern is unknown ahead of time and has to be determined from the data themselves. This determination has to be sufficiently precise to permit the best recovery of the sky signals. The time-ordered data processing has to be highly accurate so as not to affect the cosmological content of the data.

- The time domain measurements are usually contaminated by a priori unpredictable levels of instrumental and other systematic effects, which first have to be detected in the presence of often dominant noise and later subtracted without compromising the cosmological and astrophysical sky signals.

- The measured sky signal is rarely just a CMB signal. On the contrary, it is usually a mixture of multiple components, which differ from the CMB because they have different frequency scaling, spatial properties or statistical properties. These have to be separated from the CMB signal.

- Last but not least, the CMB data sets are huge. The next generation of experiments will observe the sky for up to a few years, using many thousands of detectors, each of which will sample the sky as many as a hundred times per second. The forthcoming data sets, as registered by the instruments, will soon typically contain tens to hundreds of billions of measurements and exceed a petabyte when stored on disk. And this is usually just a small fraction of the full data set that the data analysis has to cope with, which in addition includes processed, intermediate products.
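
To make the step from time-ordered data to a sky map more concrete, here is a minimal Python sketch of the simplest possible map estimate: averaging all samples that fall into the same sky pixel. It is an illustration under strong assumptions, not a MIDAS algorithm. The noise is taken to be white and uncorrelated, the "scan" is a random sequence of pixel visits, and the names `bin_map`, `pixel_index` and `true_map` are hypothetical. With the long-time-scale noise correlations described above, this simple averaging is no longer optimal, and the generalized least squares and iterative solvers listed earlier take its place.

```python
import numpy as np


def bin_map(tod, pixel_index, n_pixels):
    """Naive binned map: the mean of all samples observed in each pixel.

    For white, stationary noise this equals the least squares solution
    m = (P^T P)^-1 P^T d, where P is the pointing matrix that maps sky
    pixels onto time samples and d is the time-ordered data.
    """
    hits = np.bincount(pixel_index, minlength=n_pixels)
    summed = np.bincount(pixel_index, weights=tod, minlength=n_pixels)
    sky_map = np.full(n_pixels, np.nan)  # unobserved pixels stay NaN
    observed = hits > 0
    sky_map[observed] = summed[observed] / hits[observed]
    return sky_map, hits


# Toy simulation (all numbers are arbitrary illustration values).
rng = np.random.default_rng(0)
n_pixels, n_samples = 16, 200_000
true_map = rng.normal(0.0, 1.0, n_pixels)            # sky signal per pixel
pixel_index = rng.integers(0, n_pixels, n_samples)   # toy scanning strategy
noise = rng.normal(0.0, 10.0, n_samples)             # noise dominates each sample
tod = true_map[pixel_index] + noise                  # time-ordered data

estimated_map, hits = bin_map(tod, pixel_index, n_pixels)
print("largest pixel error:", np.max(np.abs(estimated_map - true_map)))
```

Even though every individual sample is dominated by noise, repeated visits to the same pixel average it down, which is why long observations with many detectors pay off and also why the resulting data volumes are so large.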

Project goals and methodology

The growth in the size of the CMB data sets seems to follow Moore's law, i.e., their sizes double every two years, which is the same law that describes the growth in the power of the available cutting-edge supercomputers. The latter is expected to continue for years to come (at least until silicon technology loses steam). This suggests that significant computing power should, in principle, be available when it is needed. The missing piece is therefore the capability of the CMB community to exploit that power efficiently and wisely. In the supercomputing world that translates into the availability of high-performance, massively parallel algorithms and software tools. It is precisely this crucial area that the MIDAS project is designed to address.
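
As a rough illustration of what doubling every two years implies, the growth can be written as an exponential. The symbols N (data set size or computing power), t_0 (a reference year) and tau (the doubling time) are introduced here only for this sketch.

```latex
N(t) = N(t_0)\, 2^{(t - t_0)/\tau}, \qquad \tau = 2\ \text{years}
\quad\Longrightarrow\quad
\frac{N(t_0 + 10\ \text{years})}{N(t_0)} = 2^{5} = 32 .
```

Taken at face value, both the data volumes and the available computing power would thus grow by a factor of about 32 per decade.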

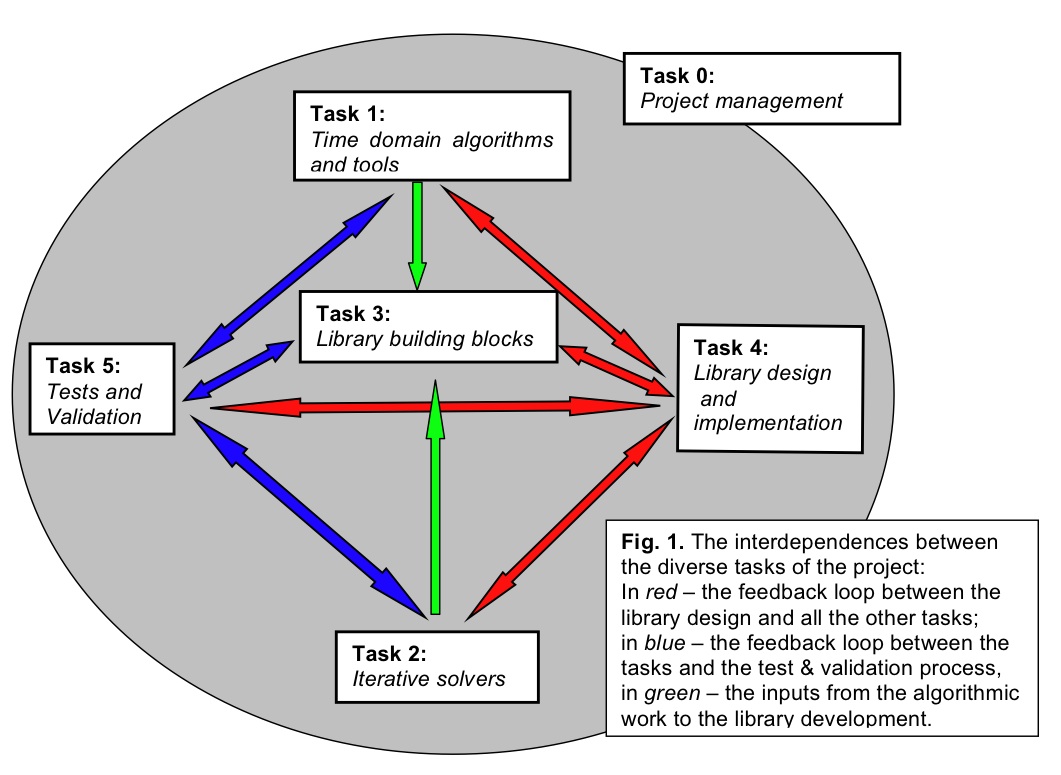

The MIDAS proposal defines five main tasks, which will be the focus of our work. These are:

- Time domain algorithms and tools;

- Iterative solvers;

- Library building blocks;

- Library design and implementation;

- Tests and validation.

Work on these tasks will proceed as an interdisciplinary cycle:

- the physicists/cosmologists will help to define the essential characteristics of specific operations, which need to be performed as part of the CMB data analysis pipeline;

- the applied mathematicians will propose a mathematical solution to the problem;

- the computer scientists will find the best implementation of the algorithm for a given choice of computer architectures;

- the software engineers will work with the computer scientists to implement the algorithms in practice;

- the physicists will then test and validate the implemented tools on simulated and actual data and provide feedback for the next round of improvements and refinements.

The project is expected to deliver results in all the aforementioned science areas. We expect a number of papers and reports to be written in the areas of computer science and signal processing on the one hand and in cosmology on the other. The single main product, however, will be the middle-layer numerical CMB data analysis library mentioned above.
